Google Lens already has lots of fancy AR tricks up its sleeve, but it'll soon get what might be its most useful feature yet, called Scene Exploration.

At Google I/O 2022, Google previewed the new feature, which it says acts as a 'Ctrl+F' shortcut for finding things in the world in front of you. Hold your phone's camera up to a scene, and Google Lens will soon be able to overlay useful information on top of products to help you make quick choices.

In Google's demo, Lens scanned shelves of candy bars and overlaid each one with information including not just the type of chocolate (for example, dark) but also its customer rating.

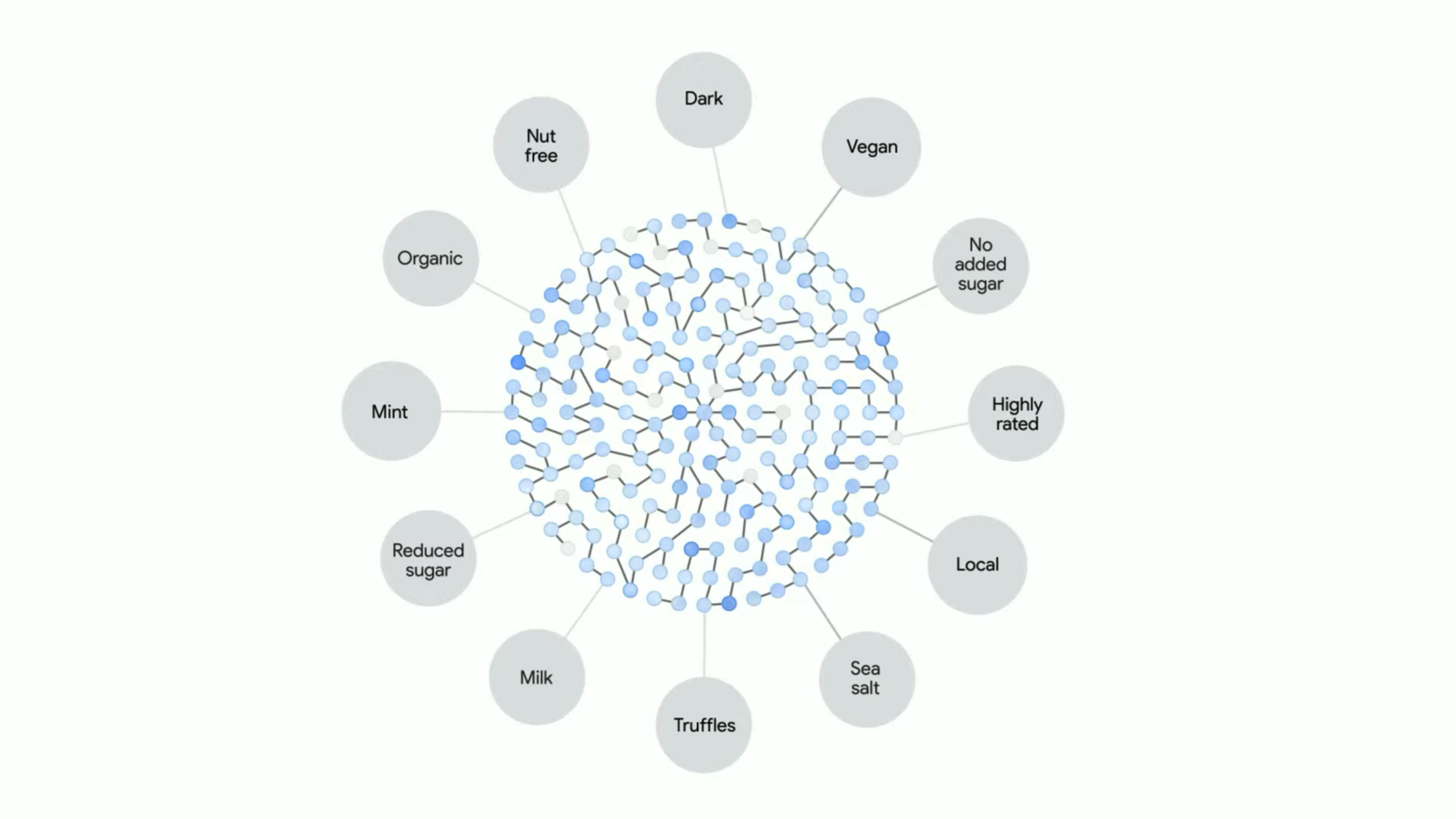

In theory, this Google Lens feature could be super-powerful and a big time-saver, particularly for shopping. Google says it runs on some smart real-time tech, including knowledge graphs that combine multiple streams of information to give you local tips.

The downside? Scene Exploration doesn't yet have a release date. Google says only that it's coming "in the future", with no precise timescale. This means it could be one to file next to Google Lens's earliest promises, which took a few years to mature. But it doesn't look like a huge leap from Lens's existing shopping tools, so we're hoping to see the first signs of it sometime this year.

Analysis: one of AR's most useful tricks so far

There's no doubt that Scene Exploration mode has massive potential for shopping, with old-school browsing in shops likely to increasingly take place from behind a phone screen – or perhaps ultimately, smart glasses.

But Google says it also has more benevolent applications. The feature could apparently help conservationists identify plant species that are in danger of extinction, or give volunteers a handy way to sort through donations.

Either way, it certainly looks like a powerful and intuitive development of another Lens feature that Google highlighted at I/O 2022, called Multi-Search. This lets you combine an image search with a keyword to help you find obscure products or objects without needing to know their name.

Multi-Search arrived in Google Search last month (look for it in the Google app on Android or iOS), and you'll soon be able to use a more specific version called 'Near Me'. Google's example was photographing a particular dish and then searching for local restaurants that serve it.

You could argue that these kinds of features are turning us all into idiots, helplessly reliant on the crutch of Google's powerful Lens and Search tech. But features like Scene Exploration and Multi-Search do look like some of the most useful examples of AR we've seen, and their versatility should prove a boon for all kinds of users.

Now all we have to do is wait to see how long they take to fully materialize on Google Lens.

from TechRadar - All the latest technology news https://ift.tt/Y6yVkjF
