Meta will begin flagging AI-generated images on Facebook, Instagram, and Threads in an effort to uphold online transparency.

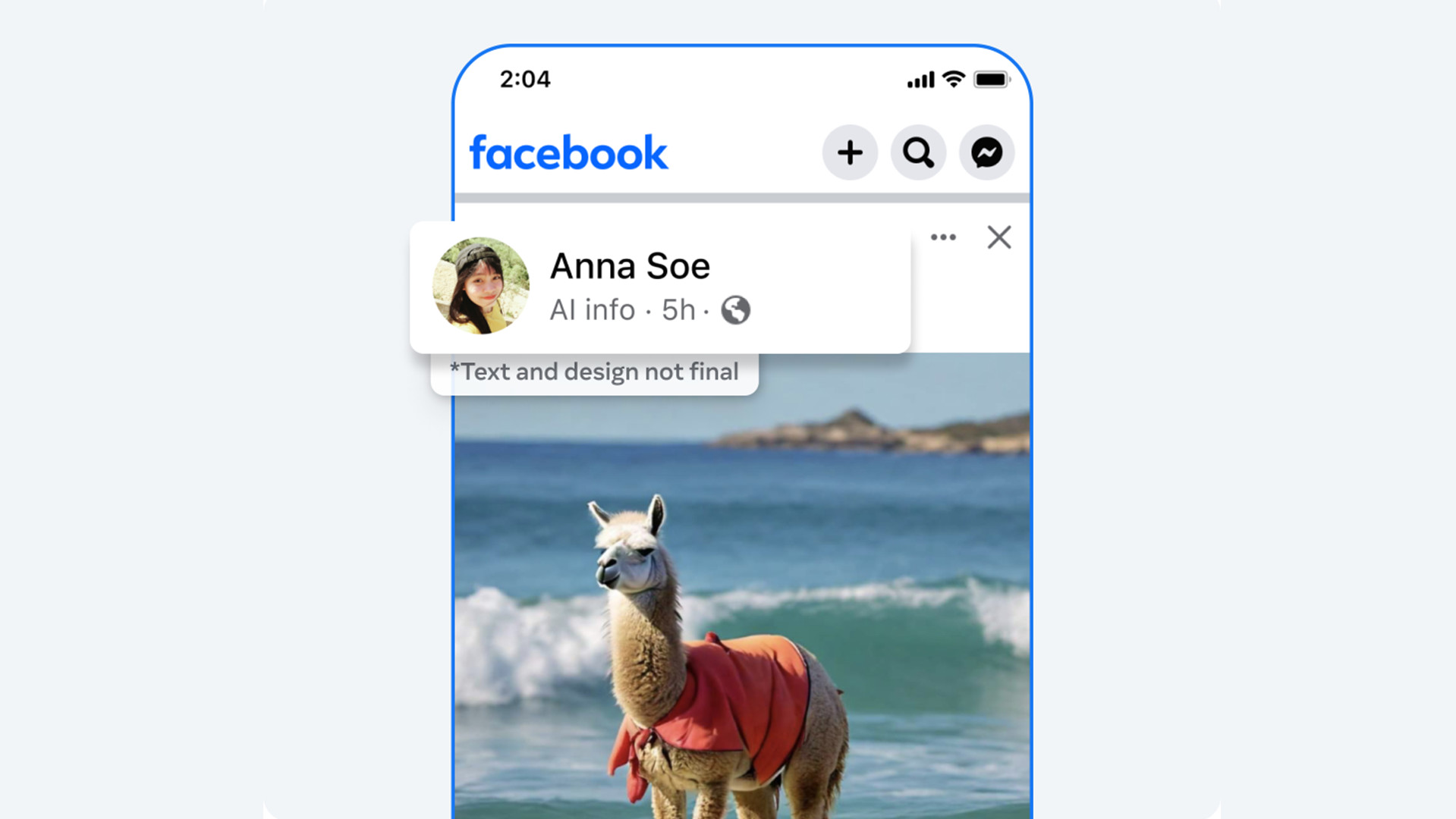

The tech giant already labels content made by its Imagine AI engine with a visible watermark. Going forward, it will do something similar for images from third-party sources such as OpenAI, Google, and Midjourney. It’s unclear exactly what these labels will look like, although the announcement post suggests they may simply consist of the words “AI Info” next to generated content. Meta says this design is not final, so it could change before the update officially launches.

In addition to visible labels, the company says it’s also working on tools to “identify invisible markers” in images from third-party generators. Imagine AI already does this by embedding watermarks in the metadata of its content; the purpose is to include a unique tag that cannot be manipulated by editing tools. According to Meta, other platforms plan to do the same, and the company wants a system in place to detect that tagged metadata.

Audio and video labeling

So far, everything has centered on labeling images, but what about AI-generated audio and video? Google’s Lumiere can create incredibly realistic clips, and OpenAI is working on adding video creation to ChatGPT. Is there anything in place to detect these more complex forms of AI content? Well, sort of.

Meta admits there is currently no way for it to detect AI-generated audio and video as reliably as images. The technology just isn’t there yet, although the industry is working “towards this capability”. Until then, the company will rely on the honor system: users will be required to disclose whether the video clip or audio file they upload was produced or edited by artificial intelligence. Failure to do so will result in a “penalty”. What’s more, if a piece of media is so realistic that it risks deceiving the public, Meta will attach “a more prominent label” offering important details.

Future updates

Meta is also working to improve its own first-party tools.

The company’s AI research lab, FAIR, is developing a new type of watermarking technology called Stable Signature. Invisible markers can apparently be removed from the metadata of AI-generated content; Stable Signature is meant to prevent that by making the watermark an integral part of the “image generation process”. On top of all this, Meta has begun training several LLMs (large language models) on its Community Standards so the models can determine whether certain pieces of content violate its policies.

Expect the social media labels to roll out within the coming months. The timing should come as no surprise: 2024 is a major election year for many countries, most notably the United States, and Meta is seeking to limit the spread of misinformation on its platforms as much as possible.

We reached out to the company for more information on what kind of penalties users may face if they don’t adequately label their posts, and whether it plans to mark images from third-party sources with a visible watermark. This story will be updated at a later time.

Until then, check out TechRadar's list of the best AI image generators for 2024.

from TechRadar - All the latest technology news https://ift.tt/3E4Uph2